Wellcome Collection, London

This Open for Business event was linked to the INI programme on Heavy Tails in Machine Learning. It took place within the workshop on Stochastic Gradient Descent (SGD): Stability, Momentum Acceleration and Heavy Tails, and was developed in partnership with the Alan Turing Institute. It aimed to provide a meeting ground where representatives of academia, research and industry working on related themes could interact and exchange ideas, with the objective of identifying points of mutual interest and possible co-activity.

Background

This OfB event discussed cutting-edge research in data science at the intersection of heavy-tailed distributions and differential privacy. The session bridged theoretical insights and practical applications, shedding light on the role of heavy tails in machine learning and of differential privacy in data protection compliance, two topics that are shaping the future of data-driven decision-making.

Heavy-tailed distributions are often associated with observations that deviate significantly from the mean, which makes them suitable for modelling phenomena with outliers. This association has led the machine learning and statistics communities to view heavy-tailed behaviour in a negative light, attributing it to outliers or numerical instability. However, heavy tails are pervasive across many domains.

Many natural systems exhibit heavy-tailed characteristics, which fundamentally shape their properties. Recent studies in machine learning have revealed that the presence of heavy tails is intricately linked to the stability, geometry, topology and dynamics of the learning process. Heavy tails arise naturally during training and have been shown to improve the performance of machine learning algorithms. This event aimed to facilitate the exchange of ideas at the intersection of applied probability, optimisation and theoretical machine learning, focusing on practical problems where heavy tails, stability or the topological properties of optimisation algorithms play a crucial role.

Differential privacy is highly relevant to businesses because it can help them comply with data privacy regulations such as GDPR and CCPA while retaining the ability to analyse customer behaviour effectively. Non-compliance with these regulations can lead to substantial fines. According to a 2021 report by the global law firm DLA Piper, fines totalling €273 million had been imposed under GDPR since May 2018, and these fines are expected to rise as countries adopt more thorough, automated methods of enforcing compliance. The objective of this session was to foster an exchange of ideas linking the differential privacy guarantees developed in academia with the real-world difficulties that arise when implementing them.

Aims & Objectives

To this end, the day's programme included a session of talks from industry and academia on the themes of:

- Heavy Tails

- Differential Privacy

And two moderated discussions:

- Heavy Tails and Clipping: Benefits and Difficulties

- Differential Privacy: Strategies, Benefits and Limitations

The event ran from 10.00 to 16.00. All timings are GMT.

Registration and Venue

Registration for this event is now closed. For any questions, please contact gateway@newton.ac.uk.

The workshop took place at the Wellcome Collection. Please visit the Wellcome Collection website for further information about the venue.

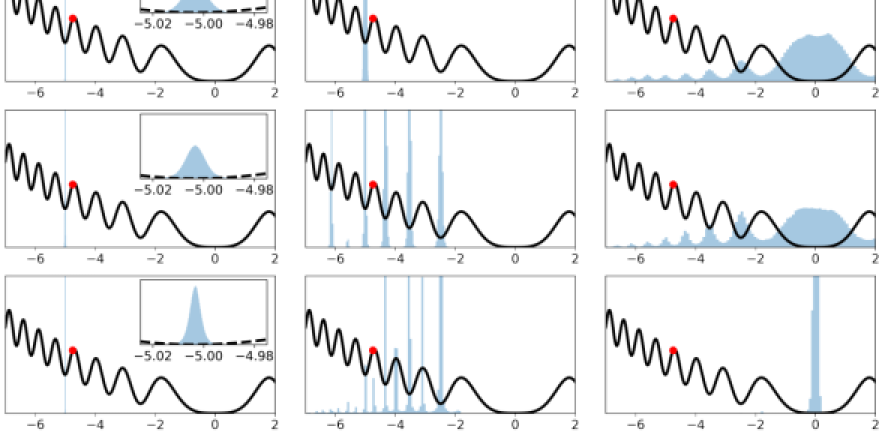

The image on this page is reproduced from the paper "Multiplicative noise and heavy tails in stochastic optimization" by Liam Hodgkinson and Michael Mahoney, to whom it belongs.